A Software Testing View on Machine Learning Model Quality

I’m planning a loose collection of summaries from lectures from my Software Engineering for AI-Enabled Systems course, starting here with my Model Quality lecture.

Model quality primarily refers to how well a machine-learned model (i.e., a function predicting outputs for given inputs) generalizes to unseen data. Data scientists routinely assess model quality with various accuracy measures on validation data, which seems somewhat similar to software testing. As I will discuss, there are significant differences, but there are also many directions where a software testing view can likely provide insights beyond the classic methods taught in data science classes.

Note that model quality refers specifically to the quality of the model created with a machine-learning approach from some training data. The model is only one part of an AI-enabled system, but an important one. That is, I will not discuss the quality of the machine learning training algorithm itself, nor the quality of the data used for training, nor the quality of other pipeline steps or infrastructure used for producing the model, nor the quality of how the model is integrated into a larger system design. Those are all important but should be discussed separately. Model quality matters when one needs an initial assessment of a model before going to production, wants to observe relative improvements from learning efforts, or wants to compare two models.

Traditional Accuracy Measures (The Data Scientist’s Toolbox)

Every single machine learning book and class will talk at length about how to split data into training and validation data and how to measure accuracy (focusing on supervised learning here).

In the simplest case for classification tasks, accuracy is the percentage of all correct predictions. That is, given a labeled validation dataset (i.e., multiple rows containing features and the expected output) one computes how well a model can match the expected output labels based on the features:

```python
def accuracy(model, xs, ys):
    """Fraction of validation examples whose predicted label matches the expected label."""
    count = len(xs)
    count_correct = 0
    for i in range(count):
        predicted = model.predict(xs[i])
        if predicted == ys[i]:
            count_correct += 1
    return count_correct / count
```

A confusion matrix is often shown, and on binary classification tasks, recall and precision are distinguished, discussing the relative importance of false positives and false negatives. When a classifier outputs scores that are compared against a threshold, models can be compared across all thresholds using area-under-the-curve measures, such as the area under the ROC curve (AUC). For regression tasks, there is a zoo of accuracy measures that quantify the typical distance between predictions and expected values, such as Mean Absolute Percentage Error or Mean Squared Error. For ranking problems, yet other accuracy measures are introduced, such as MAP@K or Mean Reciprocal Rank, and some fields such as natural language processing have yet other measures for certain tasks.
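Precision and recall follow directly from the counts in a binary confusion matrix. The following is a minimal sketch under stated assumptions: labels are booleans with `True` as the positive class, and the function names `confusion_counts` and `precision_recall` are hypothetical, chosen only for illustration.

```python
def confusion_counts(actual, predicted, positive=True):
    """Return (true positives, false positives, false negatives, true negatives)."""
    tp = fp = fn = tn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1
        elif p == positive:
            fp += 1  # predicted positive, but actually negative
        elif a == positive:
            fn += 1  # predicted negative, but actually positive
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall(actual, predicted, positive=True):
    tp, fp, fn, _ = confusion_counts(actual, predicted, positive)
    # Precision: share of positive predictions that are correct (hurt by false positives).
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: share of actual positives that were found (hurt by false negatives).
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


actual = [True, True, True, False, False, False]
predicted = [True, False, False, True, False, False]
print(confusion_counts(actual, predicted))  # (1, 1, 2, 2)
print(precision_recall(actual, predicted))  # precision 0.5, recall 1/3
```

Which of the two numbers matters more depends on the relative cost of false positives and false negatives in the application at hand.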

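For example, when predicting sales prices of houses based on characteristics of these houses and their neighborhood, we could compare the model’s predictions with the actual prices. For four houses with actual prices of $250k, $498k, $211k, and $210k, where our predictions are off by $20k, $32k, $1k, and $9k respectively, we are barely 5% off on average:

$$\text{MAPE} = \frac{1}{4}\left(\frac{20}{250} + \frac{32}{498} + \frac{1}{211} + \frac{9}{210}\right) = \frac{0.08 + 0.064 + 0.005 + 0.043}{4} = 0.048$$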
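The MAPE computation above can be reproduced in a few lines. This is a sketch, not a library API: the `mape` helper is hypothetical, and since only the size of each error is given, the individual predicted prices (and thus the direction of each error) are assumptions. MAPE takes absolute values, so the direction does not change the result.

```python
def mape(actual, predicted):
    """Mean Absolute Percentage Error: average of |actual - predicted| / |actual|."""
    errors = [abs(a - p) / abs(a) for a, p in zip(actual, predicted)]
    return sum(errors) / len(errors)


# Actual sales prices in $1000s, as in the example.
actual = [250, 498, 211, 210]
# Hypothetical predictions, off by 20, 32, 1, and 9 (directions assumed).
predicted = [230, 530, 210, 219]

print(round(mape(actual, predicted), 3))  # 0.048
```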

Pretty much all accuracy measures are difficult to interpret in isolation. Is 5% MAPE for housing price predictions good or not? Accuracy measures are usually interpreted relative to other accuracy measures, for example when observing improvements between two model versions. It is almost always useful to consider simple baseline heuristics, such as random guessing or “always predict the average price in the neighborhood”. Improvements can be well expressed as reduction in error, which is typically much easier to interpret than raw accuracy numbers:

$$\text{reduction in error} = \frac{(1 - \text{accuracy}_{\text{baseline}}) - (1 - \text{accuracy}_{\text{model}})}{1 - \text{accuracy}_{\text{baseline}}}$$

For example, an improvement from 99.9% to 99.99% accuracy is a 90% reduction in error, which may be a much more significant achievement than the 50% reduction when going from 50% to 75% accuracy.
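As a quick check of the two examples, here is a direct, hypothetical translation of the reduction-in-error formula into a helper function; accuracies are given as fractions rather than percentages.

```python
def reduction_in_error(baseline_accuracy, model_accuracy):
    """Relative reduction of the error rate compared to a baseline (both as fractions)."""
    baseline_error = 1 - baseline_accuracy
    model_error = 1 - model_accuracy
    return (baseline_error - model_error) / baseline_error


print(round(reduction_in_error(0.999, 0.9999), 2))  # 0.9
print(round(reduction_in_error(0.50, 0.75), 2))     # 0.5
```

Rounding is only there because floating-point arithmetic on values like 1 - 0.9999 is not exact.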

On terminology: In the machine learning world, data scientists typically refer to model accuracy as performance (e.g., “this model performs well”), which is confusing to someone like me who uses the term performance for execution time. Then again, when data scientists talk about time, they use terms like learning latency or inference time. This probably shouldn’t be surprising given the many different meanings of “performance” in business, art, law, and other fields, but be aware of this ambiguity in interdisciplinary teams and be explicit about what you mean by “performance”.

Analogy to Software Testing

It is tempting to compare evaluating model quality with software testing. In both cases, we execute the system/model with different inputs and compare the computed outputs with expected outputs. However, there are important conceptual differences.

A software test executes the system in a controlled environment with specific inputs (e.g., a function call with specific parameters) and expects specific outputs, e.g., “assertEquals(4, add(2, 2));”. A test suite fails if any single one of its tests does not produce the expected output. Testing famously cannot assure the absence of bugs, only show their presence.

Validation data though plays a very different role than software tests. Even though validation data provides inputs and expected outputs, we would not translate the housing data above into a test suite, because it would fail the entire test suite on a single non-perfect prediction:

```java
assertEquals(250000, model.predict([3, .01, ...]));
assertEquals(498000, model.predict([4, .01, ...]));
assertEquals(211000, model.predict([2, .03, ...]));
assertEquals(210000, model.predict([2, .02, ...]));
```

In model quality, we do not expect perfect predictions on every single data point in our validation dataset. We do not really care about any individual data point but about the overall fit of the model. We might be perfectly happy with 80% accuracy, and we are well aware that there may even be incorrect labels or noisy data in the training or validation set. A single wrong prediction is not a bug.
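For contrast, a check that matches how model quality is actually judged asserts a threshold on an aggregate measure over the whole validation set. This is a sketch under assumptions: the predicted prices are hypothetical, the 10% MAPE threshold is an arbitrary illustration, and `check_model_quality` is not an existing testing API.

```python
def mape(actual, predicted):
    """Mean Absolute Percentage Error over the whole validation set."""
    return sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


def check_model_quality(expected, predicted, max_mape=0.10):
    """Pass or fail on the aggregate error, not on any single data point."""
    error = mape(expected, predicted)
    assert error <= max_mape, f"MAPE {error:.3f} exceeds threshold {max_mape:.3f}"
    return error


# Expected sales prices from the housing example.
expected = [250000, 498000, 211000, 210000]
# Hypothetical model predictions: none matches exactly, so every
# per-point assertEquals would fail.
predicted = [230000, 530000, 210000, 219000]

exact_matches = sum(e == p for e, p in zip(expected, predicted))
print(exact_matches)                                        # 0
print(round(check_model_quality(expected, predicted), 3))  # 0.048
```

Python skips `assert` statements when run with `-O`, so a real suite would more likely run such a check through a test framework.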

In fact, as I argue in Machine Learning is Requirements Engineering, the entire notion of model bug is problematic and comes from pretending that we have some implicit specifications for the model or from confusing terminology for validation and verification. We should not ask whether a model is correct but how well it fits the problem.

Performance testing might be a slightly better analogy. Here we evaluate the quality of an implementation with regard to execution time, usually without specifications, while accepting some nondeterminism and noise and without expecting exact performance behavior. Instead, we average over multiple executions, possibly with diverse inputs, not unlike evaluating accuracy by averaging over validation data. We may set expectations for performance (regression tests) or simply compare multiple implementations (benchmarking).

```java
@Test(timeout=100)
public void testCompute() {
    expensiveComputation(...);
}
```

On terminology: Strike the term model bug from your vocabulary and avoid asking about the correctness of models; rather, evaluate fit, credibility, or accuracy (or, I guess, performance). Prefer “evaluate model quality” or “measure model accuracy” over “testing a model”. The word testing simply brings too much baggage.

Curating Validation Sets (Learning from Test Case Selection)

Even though the software testing analogy is not a great fit, there are still things we can learn from many decades of experience and research on software testing.

In general, if we want to use the testing analogy, I think a validation set consisting of multiple labeled data points corresponds roughly to a single unit test or regression test. Whenever we want to test the behavior of a specific aspect of the model, we want to do so with multiple data points. Whenever we want to understand model quality in more detail, we should do so with multiple validation sets. When we evaluate model accuracy only with a single validation set, we are missing out on many nuances and get only a very coarse aggregate picture.

The challenge then is how to identify and curate multiple validation sets. Here software engineering experience may come in handy. Software engineers have many strategies and heuristics for selecting test cases that may also be helpful for curating validation data. While validation data is traditionally selected more or less randomly from a population of (hopefully representative) data points when splitting it off from the training data, not all inputs are equal. We want multiple validation sets to represent different tests:

- Important use cases and regression testing: Consider voice recognition for a smart assistant. Commands like “call mom” or “what’s the weather tomorrow” are extremely common and important use cases whose recognition almost certainly should not break, whereas wrongly recognizing “add asafetida to my shopping list” may be more forgivable. It may be worth having a separate validation set for each important use case (e.g., consisting of multiple “call mom” recordings by different speakers with different accents) and creating a regression test that expects a very high accuracy for these, much higher than expected from the overall validation set. There is some analogy here to unit tests or performance regression tests, but again, accuracy is expressed as a probability over multiple inputs representing a single use case.

- Representing minority use cases and fairness: Models are often much more accurate for populations for which more training data is available and often do worse on minorities or less common inputs (e.g., voice recognition for speakers with certain accents, face recognition for various minorities). When minorities represent only a small fraction of the users, low accuracy for them lowers overall accuracy on large representative validation sets only marginally. Here it is useful to collect a validation set for each important user group, minority, or use case, again consisting of many data points representing this group. Checking outliers, checking common characteristics of inputs leading to wrong predictions, and monitoring in production can help to identify potential subgroups with poor performance.

- Representing important domain concepts: Machine learning builds models that best fit a training dataset, but it does not necessarily learn concepts that humans would consider as important for the task. For example, a deep-learning sentiment analysis model for text may usually pick up on negations and be robust to typos in the training dataset, but it is not clear that it learns the actual concepts of negation and typos that would generalize beyond the distribution of the training and validation data. Here it is possible to identify domain knowledge that the model should learn and curate datasets to specifically test how well the model does on those important tasks, for example, systematically curating data to check how well the model handles negation and double negation, typos, and other important domain concepts.

- Setting stretch goals: Some inputs may be particularly challenging, and we might be okay with the model not performing well on them right now (e.g., speech recognition on low-quality audio). It can be worth separating out a validation set for known challenging cases and tracking them as stretch goals; that is, we are okay with low accuracy right now, but we’ll track improvement over time.
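The kinds of validation sets above can be combined into one evaluation report. The following is a minimal sketch, assuming each validation set carries its own accuracy threshold: important use cases get strict thresholds, while stretch goals have no threshold and are only tracked. The `ValidationSet` class, the thresholds, and the toy recognizer that maps recording IDs to transcripts are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class ValidationSet:
    name: str
    examples: List[Tuple[str, str]]       # (input, expected label) pairs
    min_accuracy: Optional[float] = None  # None marks a stretch goal: tracked, never fails


def accuracy(model: Callable[[str], str], examples: List[Tuple[str, str]]) -> float:
    correct = sum(1 for x, expected in examples if model(x) == expected)
    return correct / len(examples)


def evaluate(model, validation_sets):
    """Report accuracy per validation set and list the sets that miss their threshold."""
    report: Dict[str, float] = {}
    failures: List[str] = []
    for vs in validation_sets:
        acc = accuracy(model, vs.examples)
        report[vs.name] = acc
        if vs.min_accuracy is not None and acc < vs.min_accuracy:
            failures.append(vs.name)
    return report, failures


# Hypothetical toy "speech recognizer" that maps recording IDs to transcripts.
toy_transcripts = {
    "mom_us": "call mom", "mom_uk": "call mom", "mom_in": "call mom",
    "weather_us": "what's the weather tomorrow", "weather_in": "what's the whether tomorrow",
    "noisy_1": "call tom", "noisy_2": "call mom",
}


def model(recording):
    return toy_transcripts.get(recording, "")


validation_sets = [
    ValidationSet("call mom", [("mom_us", "call mom"), ("mom_uk", "call mom"),
                               ("mom_in", "call mom")], min_accuracy=0.95),
    ValidationSet("weather", [("weather_us", "what's the weather tomorrow"),
                              ("weather_in", "what's the weather tomorrow")], min_accuracy=0.95),
    ValidationSet("low-quality audio", [("noisy_1", "call mom"), ("noisy_2", "call mom")]),
]

report, failures = evaluate(model, validation_sets)
print(report)    # {'call mom': 1.0, 'weather': 0.5, 'low-quality audio': 0.5}
print(failures)  # ['weather']
```

The low-quality audio set has the same 50% accuracy as the weather set, but only the weather set fails, because the stretch goal is merely recorded for tracking over time.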

So how do we find important problems and subpopulations to track? There are many different strategies: Sometimes there are requirements and goals for the model and the system that may give us insights. Sometimes we can decompose a task and identify important domain concepts that are needed to do well. Experts may have experience with similar systems and their problems. Studying the distribution of mistakes in the existing validation data can help. User feedback and testing in production can provide valuable insights about poorly represented groups.

In general, several classic black-box testing strategies can provide inspiration: Boundary value analysis and equivalence partitioning structure the input space and select test cases based on requirements; similarly, we may be able to think more systematically through possible user groups, task concepts, and problem classes that we are trying to solve and curate corresponding validation sets. Combinatorial testing and decision tables are also well-understood techniques for looking at combinations of characteristics, which may help to curate validation sets.
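Borrowing the combinatorial idea, one can enumerate combinations of input characteristics and check which ones the existing validation data does not cover yet; each uncovered combination is a candidate for curating additional data. This is a sketch under assumptions: the dimensions, their values, and the recording metadata are hypothetical, and the enumeration is exhaustive rather than pairwise.

```python
import math
from collections import Counter
from itertools import product

# Hypothetical characteristics (dimensions) of voice-assistant recordings.
dimensions = {
    "accent": ["US", "UK", "Indian"],
    "noise": ["quiet", "noisy"],
    "device": ["phone", "speaker"],
}

# Hypothetical metadata of the recordings in an existing validation set.
recordings = [
    {"accent": "US", "noise": "quiet", "device": "phone"},
    {"accent": "US", "noise": "noisy", "device": "speaker"},
    {"accent": "UK", "noise": "quiet", "device": "phone"},
    {"accent": "Indian", "noise": "quiet", "device": "speaker"},
]


def coverage_gaps(dimensions, recordings, min_count=1):
    """Return every combination of characteristic values with fewer than min_count recordings."""
    names = list(dimensions)
    counts = Counter(tuple(r[name] for name in names) for r in recordings)
    # Each combination is a candidate validation slice (exhaustive, not pairwise).
    return [combo for combo in product(*dimensions.values()) if counts[combo] < min_count]


total = math.prod(len(values) for values in dimensions.values())
gaps = coverage_gaps(dimensions, recordings)
print(f"{len(gaps)} of {total} combinations have no recordings")
for combo in gaps[:3]:
    print(combo)
```

With many dimensions, the exhaustive product grows quickly; pairwise (2-way) combinatorial testing would instead select a smaller set of combinations that still covers every pair of values.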

On this topic of validation set curation, see also Hulten, Geoff. “Building Intelligent Systems: A Guide to Machine Learning Engineering.” Apress, 2018, Chapter 19 (Evaluating Intelligence). The closest discussion on the importance of testing domain concepts I’m aware of is Ribeiro et al.’s 2020 ACL paper Beyond Accuracy: Behavioral Testing of NLP Models with CheckList where they discuss different “capabilities” such a model should have with distinct test sets. The notion of testing models for specific domain concepts seems to gain some traction in the academic ML literature under the term “stress testing” (don’t get me started on naming here) as discussed in D’Amour et al.’s 2020 article Underspecification presents challenges for credibility in modern machine learning.

Automated (Random) Testing

A popular research field is automatically generating test cases, known variously as automated testing, fuzz testing, or random testing. That is, rather than writing each unit test by hand, given a piece of software and maybe some specifications, we automatically generate (lots of) inputs to see whether the system behaves correctly for all of them. Techniques range from “dumb fuzzing”, where inputs are generated entirely randomly, to many smarter techniques that use dynamic symbolic execution or coverage-guided fuzzing to maximally cover the implementation. This has been very effective at finding bugs and vulnerabilities in many classes of software systems, such as Unix utilities and compilers. So would this also work to assess model quality?

It’s trivial to generate thousands of validation inputs for a machine-learned model, e.g., sampling uniformly across all features, sampling from known distributions for each feature, sampling from a joint probability distribution of all features (e.g., derived from real data or modeled with probabilistic programming), or generating inputs by mutating real inputs. The problem is how to get the corresponding labels to determine whether the model’s prediction is accurate on these generated inputs.
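To illustrate how cheap input generation is, here is a sketch of three of the strategies listed: uniform sampling, independent per-feature distributions, and mutation of a real input. Sampling from a joint distribution is not shown. The two housing features, their ranges, and the distribution parameters are assumptions chosen for illustration.

```python
import random

rng = random.Random(42)  # fixed seed so the generated inputs are reproducible

# Hypothetical housing features and their ranges.
BEDROOMS = (1, 6)
CRIME_RATE = (0.0, 0.1)


def sample_uniform():
    """Sample each feature uniformly across its range."""
    return {"bedrooms": rng.randint(*BEDROOMS), "crime_rate": rng.uniform(*CRIME_RATE)}


def sample_per_feature():
    """Sample each feature independently from an assumed distribution."""
    bedrooms = max(1, round(rng.gauss(3, 1)))
    crime_rate = min(CRIME_RATE[1], rng.expovariate(50))  # exponential with mean 0.02
    return {"bedrooms": bedrooms, "crime_rate": crime_rate}


def mutate(real_input, scale=0.1):
    """Derive a new input by slightly perturbing a real one."""
    return {
        "bedrooms": max(1, real_input["bedrooms"] + rng.choice([-1, 0, 1])),
        "crime_rate": real_input["crime_rate"] * (1 + rng.uniform(-scale, scale)),
    }


real_input = {"bedrooms": 3, "crime_rate": 0.01}
generated = (
    [sample_uniform() for _ in range(1000)]
    + [sample_per_feature() for _ in range(1000)]
    + [mutate(real_input) for _ in range(1000)]
)
print(len(generated), "inputs generated, but none of them has a label")
```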

The Darn Oracle Problem

The problem with all automated testing techniques is how to know whether a test passes or fails, known as the oracle problem. That is, whether software testing or model evaluation, we can easily produce millions of inputs, but how do we know what to expect as outputs?

There are a couple of common strategies to deal with the oracle problem in random testing:

- Manually specifying the outcome: Humans can often provide the expected outcome based on their understanding or specification of the problem, but this obviously does not scale when generating thousands of random inputs and cannot be automated. Even when crowdsourcing the labeling in a machine-learning setting, for many problems it is not clear that humans would be good at providing labels for “random” inputs.

- Comparing against a gold standard: If we have an alternative implementation (typically a slower but correct implementation we want to improve upon) or an executable specification, we can use it to simply compute the expected outcome. Even if we are not perfectly sure about the correctness of the alternative implementation, we can use it to identify and investigate discrepancies, or even vote when multiple implementations exist. Such differential testing has been extremely successful, for example, in finding compiler bugs. Unfortunately, we usually use machine learning exactly when we have neither a specification nor an existing good solution, so it is unlikely that we’ll have a gold-standard implementation of an image recognition algorithm or recidivism prediction algorithm (aside from testing in production with some telemetry data, more on that another time).

- Checking partial specifications and global invariants: Even when we do not have full specifications about a problem and the expected outcomes, we sometimes have partial specifications or global invariants. Most fuzzers look for crashing bugs or violations of other global invariants, such as unsafe memory access. That is, instead of checking that the output for a given input matches some expected output, we only check that the computation does not crash and does not violate any other partial or global specifications we may have (e.g., all opened file handles need to be closed). Developers can also manually specify partial specifications, typically with assert statements, for example for pre- and post-conditions of functions and for data and loop invariants (though developers are often reluctant to write even those). Assert statements turn runtime violations of these partial specifications into crashes, which can then be detected for random inputs. Notice that partial specifications check only some aspects of correctness, namely properties that should hold for all executions. Interestingly, there are some partial specifications we can define for machine-learned models that may be worth testing for, such as fairness and robustness, which is where automated testing may be useful.

- Simulation and inverse computations: In a few scenarios, it can be possible to simulate the world to derive input-output pairs, or it may be easier to randomly select outputs and derive corresponding inputs. For example, for prime factorization, it is much easier to pick a set of prime numbers (the output) and compute the input by multiplying them. Similarly, one could create a scene in a raytracer (with known locations of objects) and then render the image to create the input for a vision algorithm that detects those objects. This seems to work only in a few settings, but it can be very powerful when it does, because we can automatically generate input-output pairs.
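The prime factorization example of inverse computation can be sketched as a small random test generator: pick the expected output (a multiset of primes) first, multiply to obtain the input, and compare against what the system under test returns. The trial-division `factorize` function is a stand-in system under test, and the list of small primes is an arbitrary choice.

```python
import random


def factorize(n):
    """System under test: prime factorization by trial division (factors in ascending order)."""
    factors = []
    divisor = 2
    while divisor * divisor <= n:
        while n % divisor == 0:
            factors.append(divisor)
            n //= divisor
        divisor += 1
    if n > 1:
        factors.append(n)  # whatever remains is itself prime
    return factors


PRIMES = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41, 43, 47, 53, 59, 61, 67, 71]


def generate_test_case(rng):
    """Inverse computation: choose the expected output first, then derive the input."""
    expected = sorted(rng.choice(PRIMES) for _ in range(rng.randint(1, 6)))
    n = 1
    for p in expected:
        n *= p
    return n, expected


rng = random.Random(0)
for _ in range(1000):
    n, expected = generate_test_case(rng)
    # The oracle comes for free: we know the correct factors by construction.
    assert factorize(n) == expected, (n, expected)
print("all 1000 generated input-output pairs passed")
```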

Invariants and Metamorphic Testing

For evaluating machine-learned models, while it seems unclear whether we will ever be able to automatically generate input-output pairs beyond a few simulation settings, it is worth thinking about invariants, typically invariants that should hold over predictions of different or related inputs. Here are a few examples of invariants for a model f:

- Credit rating model f should not depend on gender: For all inputs x and y that differ only in gender, f(x)=f(y). This is a rather simplistic fairness property that does not account for correlations.

- Sentiment analysis f should not be affected by synonyms: For all inputs x: f(x) = f(x.replace(“is not”, “isn’t”))

- Negation should swap meaning: For all inputs x in the form “X is Y”: f(x) = 1-f(x.replace(“ is “, “ is not “))

- Small changes to inputs should not affect the outcome (robustness): For all x in our training set, f(x) = f(mutate(x, δ))

- Low credit scores should never get a loan (sufficient classification condition, invariant known as anchor): For all loan applicants x: (x.score<645) ⇒ ¬f(x)

Identifying such invariants is not easy and requires domain knowledge, but once we have such invariants, automated testing is possible. They will only ever assess one aspect of model quality, but that may be an important aspect. It may even correspond to expected capabilities of the model, such as handling negation, robustness to typos, and gender fairness in many natural language processing tasks (some examples). Likely invariants can also be mined automatically from data; see the literature on specification mining and anchors. Such invariants are typically known as metamorphic relations in the software engineering literature, but they are rarely discussed in the machine learning literature. In its general form, a metamorphic relation is an invariant of the form $\forall x.\; f(g_I(x)) = g_O(f(x))$, where $g_I$ and $g_O$ are two functions (e.g., $g_I(x)$ = x.replace(“ is “, “ is not “) and $g_O(x) = 1 - x$).
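A metamorphic relation translates into a small generic check: for each input x, compare f(g_I(x)) with g_O(f(x)). The sketch below applies it to two of the invariants listed earlier. The `toy_sentiment` model is hypothetical and deliberately flawed (it recognizes " not " but ignores contractions), so that one of the relations reveals a problem.

```python
from typing import Callable, Iterable, List


def metamorphic_violations(f: Callable, g_in: Callable, g_out: Callable,
                           inputs: Iterable) -> List:
    """Return all inputs x for which f(g_in(x)) == g_out(f(x)) does not hold."""
    return [x for x in inputs if f(g_in(x)) != g_out(f(x))]


def toy_sentiment(text: str) -> float:
    """Hypothetical stand-in model (1.0 = positive, 0.0 = negative) with a deliberate
    weakness: it recognizes the negation " not " but ignores contractions like "isn't"."""
    positive = any(word in text for word in ("good", "great", "tasty"))
    negated = " not " in text
    return float(positive != negated)


# Negation should swap meaning: g_I inserts "not", g_O flips the prediction.
negation = metamorphic_violations(
    toy_sentiment,
    g_in=lambda x: x.replace(" is ", " is not "),
    g_out=lambda y: 1 - y,
    inputs=["The movie is great", "The food is bad", "The service is good"],
)

# Contractions should not matter: g_I contracts "is not", g_O is the identity.
contraction = metamorphic_violations(
    toy_sentiment,
    g_in=lambda x: x.replace("is not", "isn't"),
    g_out=lambda y: y,
    inputs=["The movie is not great", "The food is not bad"],
)

print(negation)     # []
print(contraction)  # ['The movie is not great', 'The food is not bad']
```

No labels are needed anywhere: the relation itself serves as the (partial) oracle.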

Once invariants are established, generating lots of inputs is the easy part. Those could be random inputs or inputs generated by changing data from existing datasets. Techniques from adversarial learning, which move along the gradients of a model, can often find inputs that violate the specifications much more effectively than random sampling. For some problems and models, it is even possible to formally verify that a model meets the specification for certain kinds of inputs.
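Here is what the random-input variant can look like for the anchor invariant (x.score < 645) ⇒ ¬f(x). This is a sketch using plain random sampling; the gradient-based adversarial search is not shown. The loan model, its income override, and the sampling ranges are all hypothetical.

```python
import random


def toy_loan_model(applicant):
    """Hypothetical model: mostly score-driven, but a high income can override a low score."""
    return applicant["score"] >= 660 or applicant["income"] > 200_000


def anchor_holds(applicant, model):
    """Invariant: a credit score below 645 implies the loan is denied."""
    return not (applicant["score"] < 645 and model(applicant))


rng = random.Random(7)
applicants = [
    {"score": rng.randint(300, 850), "income": rng.randint(10_000, 300_000)}
    for _ in range(10_000)
]
violations = [a for a in applicants if not anchor_holds(a, toy_loan_model)]
print(f"{len(violations)} of {len(applicants)} random applicants violate the invariant")
```

Every violation is an input the model approves despite a low score, which points directly at the income override as the problem.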

Note that this view of invariants also aligns well with regarding machine learning as requirements engineering. Invariants are partial specifications or requirements, and automated testing helps to check the compatibility of multiple specifications.

Adequacy Criteria

Since software testing can only show the presence of bugs and never their absence, an important question is always when to stop testing, i.e., whether the tests are adequate. There are many strategies to approach this question, even though typically one simply stops testing pragmatically when time or money runs out or when it feels “good enough”. In machine learning, statistical power analysis could potentially be used, but in practice simple rules of thumb seem to drive the size of validation sets.
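A full power analysis is beyond a short example, but a simpler related calculation, swapped in here, already gives a principled starting point for validation set size: the number of examples needed so that a normal-approximation confidence interval for accuracy has a desired half-width. The function name and the default 95% confidence level are assumptions; `statistics.NormalDist` requires Python 3.8 or later.

```python
import math
from statistics import NormalDist


def validation_set_size(expected_accuracy, margin, confidence=0.95):
    """Examples needed so that the normal-approximation confidence interval
    for accuracy has a half-width of at most `margin`."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    p = expected_accuracy
    return math.ceil(z * z * p * (1 - p) / margin ** 2)


print(validation_set_size(0.5, 0.01))  # roughly 9,600 examples for +/- 1 point
print(validation_set_size(0.9, 0.01))  # fewer, since p(1 - p) is smaller
```

Note that this size applies to each validation set separately, so curating many small slices, each with only a handful of examples, yields wide uncertainty per slice.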

More systematically, different forms of coverage and mutation scores are proposed and used to evaluate the quality of a test suite in software testing:

- Specification coverage analyzes whether all conditions of the specification (boundary conditions, etc.) have been tested. Given that we don’t have specifications for machine-learning models, the best we can do is to think about the representativeness of validation data and about subpopulations, capabilities, and use cases when creating multiple validation sets.

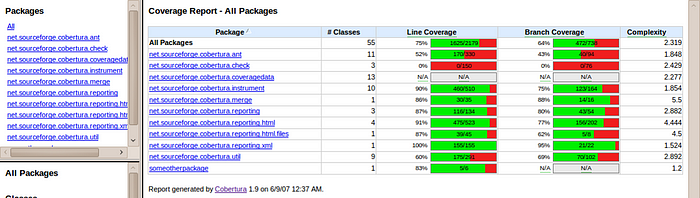

- White-box coverage like line coverage and branch coverage gives us an indication of what parts of the program have been executed. There have been attempts to define similar coverage metrics for deep neural networks (e.g., neuron coverage), but it is unclear what this really represents. Are inputs generated to cover all neuron activations representative of anything, or useful for checking invariants with automated testing? It seems too early to tell.

- Mutation scores indicate how well a test suite detects faults (mutants) injected into the program. A better test suite would detect more injected faults. However, again, a mapping to machine-learned models is unclear. Would better-curated validation sets catch more mutations to a machine-learned model, and would this be useful in practice for evaluating the quality of validation sets?
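To make neuron coverage less abstract, here is a sketch of one common formulation: the fraction of neurons whose activation exceeds a threshold for at least one input. It uses a single hypothetical fully connected ReLU layer with hand-picked weights rather than a real network, so it only illustrates the metric, not any particular tool.

```python
def relu(v):
    return max(0.0, v)


def hidden_activations(weights, biases, x):
    """Activations of one fully connected ReLU layer for input vector x."""
    return [relu(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(weights, biases)]


def neuron_coverage(weights, biases, inputs, threshold=0.0):
    """Fraction of neurons whose activation exceeds `threshold` for at least one input."""
    covered = set()
    for x in inputs:
        for i, activation in enumerate(hidden_activations(weights, biases, x)):
            if activation > threshold:
                covered.add(i)
    return len(covered) / len(weights)


# Hypothetical layer: 4 neurons over 2 input features.
weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0], [1.0, -1.0]]
biases = [0.0, 0.0, 0.0, -5.0]
inputs = [[1.0, 2.0], [0.5, 0.5]]

print(neuron_coverage(weights, biases, inputs))                  # 0.5
print(neuron_coverage(weights, biases, inputs + [[-1.0, -1.0]]))  # 0.75
```

Adding the input [-1, -1] raises coverage simply because it activates one more neuron; nothing in the metric says whether that input is realistic or tells us anything about model quality, which is exactly the open question.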

While there are several research papers on these topics, there does not seem to be a good mapping to problems in evaluating model quality, given the lack of specifications. I have not found any convincing strategy to evaluate the quality of a validation set beyond checking whether it is representative of data in production (qualitatively or statistically).

Test Automation

Finally, it’s worth pointing to the vast amount of work on test automation in software testing: tools that automatically execute the test suite on changes (smart test runners, continuous integration, nightly builds), with lots of continuous integration tools to run tests independently, in parallel, and at scale (Jenkins, Travis-CI, and many others). Such systems can also track test outcomes, coverage information, or performance results over time.

Here we find a much more direct equivalent for model quality, and also many existing tools. Ideally, the entire learning and evaluation pipeline is automated so that it can be executed and tracked after every change. Accuracy can be tracked over time, and actions can be taken automatically when significant drops in accuracy are observed. Many dashboards have been developed, both internally at companies (e.g., Uber’s Michelangelo) and as open-source and academic solutions (e.g., MLflow, Neptune, TensorBoard), and with suitable plugins even traditional continuous integration tools like Jenkins can record accuracy results over time.
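A minimal version of such an automated check is a script that a CI job runs after each training and evaluation run. The sketch below assumes a simple JSON file as the accuracy history and a tolerance of one accuracy point; both, as well as the function name, are hypothetical choices rather than features of any of the tools mentioned.

```python
import json
from pathlib import Path


def check_accuracy_regression(history_file, new_accuracy, max_drop=0.01):
    """Record new_accuracy and fail if it dropped more than max_drop since the last run."""
    path = Path(history_file)
    history = json.loads(path.read_text()) if path.exists() else []
    previous = history[-1] if history else None
    history.append(new_accuracy)
    path.write_text(json.dumps(history))  # keep tracking even when the check fails
    if previous is not None and new_accuracy < previous - max_drop:
        # A non-integer SystemExit argument is printed and yields exit status 1,
        # which CI servers treat as a failed build.
        raise SystemExit(f"accuracy dropped from {previous:.3f} to {new_accuracy:.3f}")
    return history
```

A CI job would call this with the accuracy computed on the validation set(s) after every change; comparing against the previous run rather than the best run ever is a design choice that tolerates slow drift, so a real setup might check both.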

Summary

So in summary, there are many more strategies to evaluate a machine-learned model’s quality than just traditional accuracy metrics. While software testing is not a good direct analogy, software engineering provides many lessons about curating multiple(!) validation datasets, about automated testing for certain invariants, and about test automation. Evaluating a model in an AI-enabled system should not stop with the first accuracy number on a static dataset. Indeed, as we will see later, testing in production is also important, probably even more so.